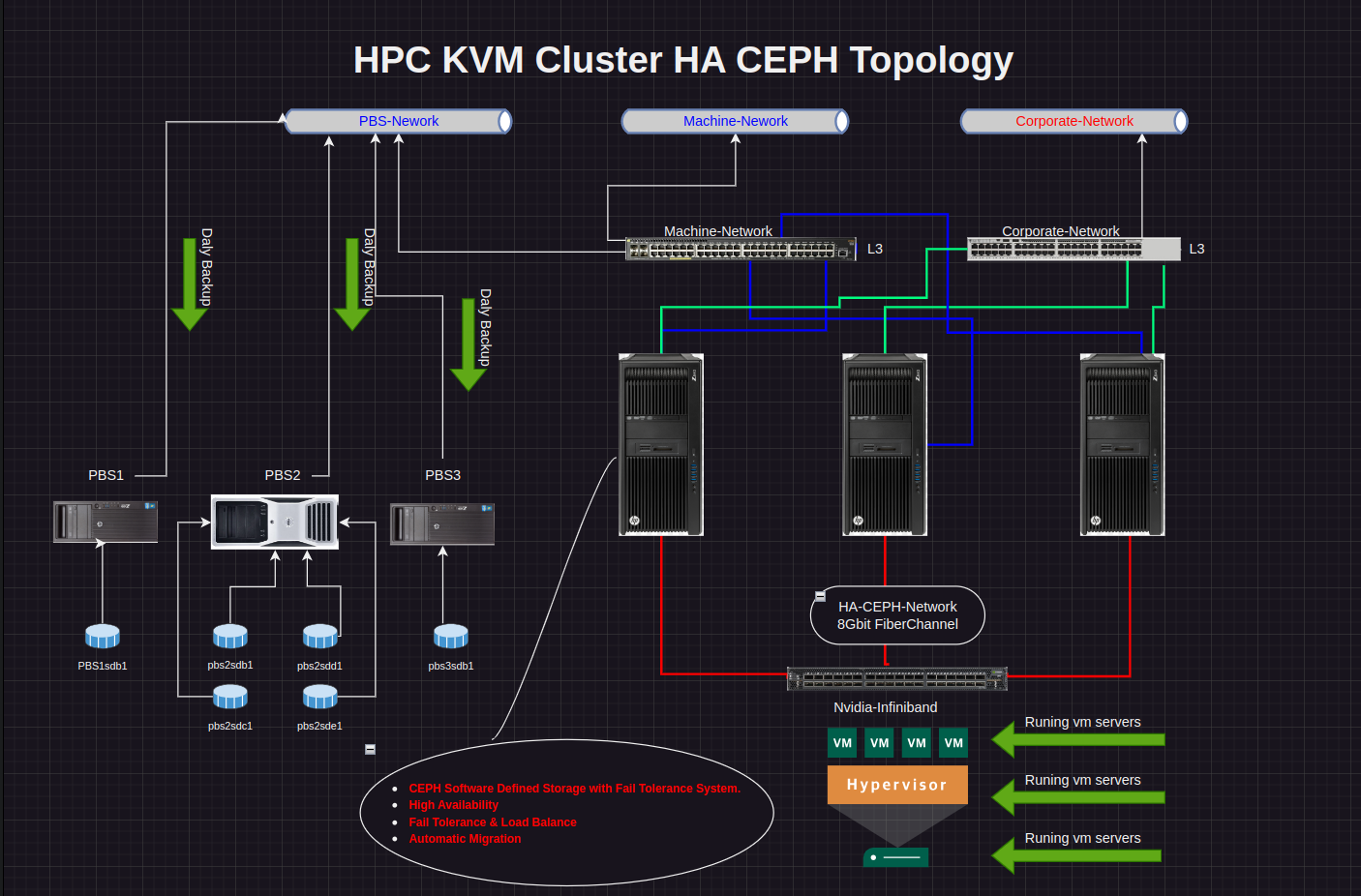

Information: The CEPH cluster consists of 3 HPC nodes and one InfiniBand switch. Each HPC Ceph node has 4 x 4 TB SSD disks. OSD replication traffic runs over this switch, which has 8 Gbit bandwidth. The Corporate, Machine, and PBS networks access the cluster through separate VLANs. LACP (802.3ad) bonding is used for the Corporate and other VLANs, which are reached through bridged networks. Below are the installation and configuration details for the cluster.

The hardware used in this setup includes:

- HPC node: HP Z840 Workstation (72 CPU cores and 256 GB RAM per node)

- CEPH Network: NVIDIA InfiniBand Mellanox ConnectX-3 switch (8 Gbit)

- OSD: 12 x 4 TB SSDs

- Access Network: 6 x 1 Gbit access network interface cards
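Here is a rough worked example of what this hardware provides. It assumes a replicated pool with the default size of 3 (described in the pool options later) and uses decimal units. It ignores BlueStore and filesystem overhead, so treat the numbers as upper bounds. The 85% figure is Ceph's default nearfull warning ratio. Ceph applies that ratio per OSD, and the sketch simplifies it to a pool-wide number.

```shell
#!/bin/sh
# Rough capacity estimate for a replicated Ceph pool (decimal GB).
# Ignores BlueStore/filesystem overhead; values mirror the hardware list above.
nodes=3
disks_per_node=4
disk_gb=4000            # 4 TB SSD
replica_size=3          # default "size" of a Proxmox-created pool

raw_gb=$((nodes * disks_per_node * disk_gb))
usable_gb=$((raw_gb / replica_size))
# Ceph raises a nearfull warning when an OSD passes the default 0.85 ratio;
# applied pool-wide here only as a simplification.
nearfull_gb=$((usable_gb * 85 / 100))

echo "raw=${raw_gb}GB usable=${usable_gb}GB nearfull_at=${nearfull_gb}GB"
```

With three hosts and three replicas, every host holds one copy of each object. If one node fails, Ceph has no fourth host to re-replicate to, so the pool runs degraded until that node returns.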

Important: We are aware that this hardware is “end of life.” However, since the disks and network cards in it are brand new, we believe it will remain useful for a while longer, at least until we acquire new hardware. If you have similar unused high-performance equipment, you may want to consider putting it to use in the same way.

Install Proxmox VE 8.2 on hpc-node1, hpc-node2, and hpc-node3

Change the network interface settings

Create a management access network and set the IP address. It should be in virtual bridge mode. You can do this from the GUI or the console. Add your management network as follows.

Before configuring the CEPH network, install the packages and kernel modules needed for the Mellanox ConnectX-3 InfiniBand cards, including the opensm subnet manager. After the installation, configure the CEPH network settings as shown below. The other network settings are shown as well.

- apt update && apt upgrade -y

- apt install opensm pve-headers -y

- modprobe ib_ipoib

- echo "ib_ipoib" >> /etc/modules-load.d/infiniband.conf

- systemctl enable opensm

- systemctl restart opensm
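The `echo ... >>` step appends a new line every time it runs, so repeating the setup leaves duplicate entries. Below is a minimal idempotent alternative, assuming you may rerun these steps. The function name `ensure_module` is hypothetical and not part of any Proxmox tooling.

```shell
#!/bin/sh
# Append a kernel module name to a modules-load.d file only if it is not
# already listed, so re-running the setup does not create duplicates.
# Hypothetical helper; usage: ensure_module <module> <file>
ensure_module() {
    module=$1
    file=$2
    # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
    if ! grep -qxF "$module" "$file" 2>/dev/null; then
        printf '%s\n' "$module" >> "$file"
    fi
}
```

As root, `ensure_module ib_ipoib /etc/modules-load.d/infiniband.conf` behaves like the `echo` line on the first run and does nothing on later runs.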

Edit /etc/network/interfaces on all nodes with nano or vi (adjust the IP addresses for each node):

```
#CEPH
auto ibs5
iface ibs5 inet static
        address 10.40.10.11/24
        mtu 65520
        pre-up modprobe ib_ipoib
        pre-up modprobe ib_umad
        pre-up echo connected > /sys/class/net/ibs5/mode

#Corporate-Bonding
auto bond0
iface bond0 inet manual
        bond-slaves ens1f1 ens1f2 ens6f1 ens6f2
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2

#Production-Bonding
auto bond1
iface bond1 inet manual
        bond-slaves ens1f0 ens1f3 ens6f0 ens6f3
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2

#PBS
auto bond2
iface bond2 inet static
        address 10.1.2.11/24
        bond-slaves enp9s0
        bond-miimon 100
        bond-mode active-backup
        bond-primary enp9s0

#Management
auto vmbr0
iface vmbr0 inet static
        address 10.64.35.101/24
        gateway 10.64.35.1
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0

#Production-Access
auto vmbr1
iface vmbr1 inet manual
        bridge-ports bond1
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

#Corporate-Access
auto vmbr2
iface vmbr2 inet manual
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

source /etc/network/interfaces.d/*
```
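Only the CEPH stanza changes from node to node. The example configuration shows hpc-node1 with 10.40.10.11. The sketch below prints the stanza for any node number, assuming a hypothetical scheme where hpc-nodeN uses 10.40.10.(10+N). Replace that scheme if your addressing differs. The function name `ib_stanza` is also hypothetical.

```shell
#!/bin/sh
# Print the IPoIB (CEPH network) stanza for one node.
# ASSUMPTION: hpc-nodeN uses 10.40.10.(10+N); only 10.40.10.11 for
# hpc-node1 comes from the guide.
ib_stanza() {
    node=$1                 # node number: 1, 2 or 3
    iface=${2:-ibs5}        # InfiniBand interface name from the example config
    cat <<EOF
auto $iface
iface $iface inet static
        address 10.40.10.$((10 + node))/24
        mtu 65520
        pre-up modprobe ib_ipoib
        pre-up modprobe ib_umad
        pre-up echo connected > /sys/class/net/$iface/mode
EOF
}
```

For example, `ib_stanza 2` prints the block for hpc-node2. After editing the file, `ifreload -a` (ifupdown2, the Proxmox VE default) applies the change without a reboot.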

The first step is to create a cluster. I am naming the cluster “hpc-cluster”. You can do this via the GUI or CLI.

Create Cluster on hpc-node1

- pvecm create hpc-cluster

- pvecm status (You can check the status of the cluster.)

Add hpc-node2 to the cluster.

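pvecm add IP-ADDRESS-CLUSTER (run on hpc-node2; IP-ADDRESS-CLUSTER is the IP address of an existing cluster member, such as hpc-node1)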

Add hpc-node3 to the cluster.

pvecm add IP-ADDRESS-CLUSTER (run on hpc-node3)

Example GUI: Members of PVE Cluster

I recommend running the cluster (corosync) communication over the management network.
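On the CLI, `pvecm create` and `pvecm add` accept a `--link0` address that binds corosync to a specific network. This is one way to follow the recommendation above. The sketch below only prints the commands (a dry run). Only 10.64.35.101 (hpc-node1's vmbr0 address) comes from this guide. The management addresses for hpc-node2 and hpc-node3 are hypothetical.

```shell
#!/bin/sh
# Dry run: print the cluster commands instead of executing them.
# ASSUMPTION: the hpc-node2/3 management addresses are hypothetical;
# only 10.64.35.101 (hpc-node1, vmbr0) is from the guide.
CLUSTER=hpc-cluster
FIRST_NODE_IP=10.64.35.101

create_cmd() {
    # Run on hpc-node1: corosync link0 on its management address.
    echo "pvecm create $CLUSTER --link0 $FIRST_NODE_IP"
}

join_cmd() {
    # Run on the joining node: contact hpc-node1, use its own management IP for link0.
    local_ip=$1
    echo "pvecm add $FIRST_NODE_IP --link0 $local_ip"
}

create_cmd
join_cmd 10.64.35.102   # on hpc-node2 (hypothetical IP)
join_cmd 10.64.35.103   # on hpc-node3 (hypothetical IP)
```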

CEPH Cluster installation and management

After completing the network and cluster settings, the next step is to install and activate CEPH on all nodes. Note that every server in this cluster environment should run Ceph; servers that will not use Ceph should not be added to the cluster.

Ceph installation on the CLI:

- pveceph install

- pveceph init --network 10.40.10.0/24

- pveceph mon create

- pveceph mgr create

- pveceph osd create /dev/sd[X]

- pveceph pool create hpc-pool --add_storages

pveceph init --network 10.40.10.0/24 #Ceph network configuration for the NVIDIA InfiniBand switch (8 Gbit). Run this once; it writes /etc/pve/ceph.conf, which is shared with all cluster nodes.

pveceph mon create #Create a Ceph monitor on each node. The monitors maintain the cluster map and synchronize with each other; a majority of them (quorum) must be available for the cluster to operate.

pveceph mgr create #Multiple Ceph managers can be installed, but only one is active at a time; the others run as standbys. After the Ceph manager is installed, a default pool will be created. If you use the Ceph dashboard, it is served by the active manager. If the node running the active manager fails, a standby manager takes over automatically; if no standby exists, you can create a manager on another HPC node.

pveceph osd create /dev/sd[X] #Create one OSD per disk. For clarity, create them one by one on each HPC node; this makes it easier to keep track of the OSDs when you create the pool.
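With four data disks per node, a small loop avoids typing the command four times. The sketch below prints the commands by default (`DRY_RUN=1`) and runs them only with `DRY_RUN=0`. The device names are hypothetical. Check yours with `lsblk` first, and never include the Proxmox system disk.

```shell
#!/bin/sh
# Print (DRY_RUN=1, default) or run (DRY_RUN=0) one "pveceph osd create"
# per data disk on the local node.
# ASSUMPTION: hypothetical device names; verify with lsblk and exclude
# the Proxmox system disk.
DISKS="/dev/sdb /dev/sdc /dev/sdd /dev/sde"

create_osds() {
    for disk in $DISKS; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "pveceph osd create $disk"
        else
            pveceph osd create "$disk"
        fi
    done
}
```

Run `create_osds` once on each node and review the printed list before setting `DRY_RUN=0`.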

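pveceph pool create hpc-pool --add_storages #Create the pool with default values and leave the PG Autoscale mode set to “on.” This way, Ceph's PG autoscaler automatically calculates the number of PGs, which are then distributed across the OSDs. The --add_storages option also adds the pool as VM and container storage in Proxmox.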

- #on: Enable automated adjustments of the PG count for the given pool.

- #off: Disable autoscaling for this pool. It is up to the administrator to choose an appropriate pg_num for each pool. (If you choose this option, you must calculate the PG count yourself from the number of OSDs and the replica size. An accurate calculation is important: a poorly chosen PG count leads to uneven data distribution across the OSDs and wasted resources.)

- #warn: If set to warn, it produces a warning message when a pool has a non-optimal PG count

- #size: The number of replicas per object; the default is 3.

- #min_size: The minimum number of replicas that must be available for the pool to accept I/O. If fewer replicas of a PG are available, I/O to that PG blocks until it recovers. The Proxmox default is 2.

- # of PGs: The number of placement groups (PGs) in the pool. Objects are mapped to PGs, and PGs are assigned to OSDs. If you set the number of PGs manually, calculate it carefully from the number of OSDs, since every PG also consumes CPU and memory on the OSDs. Proxmox uses a default of 128 PGs, and with autoscaling enabled the value is adjusted for the pool automatically.

- #CRUSH rule: The rule that determines where objects and their replicas are placed within the cluster.
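If the autoscale mode is `off`, the PG count has to be worked out by hand. The sketch below uses the classic rule of thumb from the Ceph placement-groups documentation: roughly 100 PGs per OSD, divided by the replica size, rounded up to a power of two. It then prints, without running, a matching `pveceph pool create` command. The explicit option values are assumptions that mirror the defaults described above. With several pools, this PG budget has to be shared between them.

```shell
#!/bin/sh
# Rule-of-thumb PG count: (OSDs * target PGs per OSD) / replica size,
# rounded up to the next power of two. Only relevant with autoscaling off.
pg_count() {
    osds=$1
    size=$2
    per_osd=${3:-100}                                  # target PGs per OSD
    target=$(( (osds * per_osd + size - 1) / size ))   # ceiling division
    pgs=1
    while [ "$pgs" -lt "$target" ]; do
        pgs=$((pgs * 2))
    done
    echo "$pgs"
}

pgs=$(pg_count 12 3)    # 12 OSDs, size 3: target 400, rounded up to 512
# Dry run: print the command instead of executing it.
echo "pveceph pool create hpc-pool --add_storages --size 3 --min_size 2 --pg_autoscale_mode off --pg_num $pgs"
```

For this cluster the result is 512 PGs. With size 3, that is 512 × 3 / 12 = 128 PG replicas per OSD.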

Ceph installation on the GUI:

- hpc-node[1,2,3] > CEPH > Install

- hpc-node[1,2,3] > CEPH > Monitor > Create Monitor

- hpc-node[1] > CEPH > Monitor > Create Manager (#if you install ceph-dashboard)

- hpc-node[1,2,3] > CEPH > OSD > Create OSD

- hpc-node[1,2,3] > CEPH > Pools > Create pool

CEPH DASHBOARD


CEPH OSD

CEPH POOL

Create HA for all virtual machines in the GUI

- First, create an HA group.

- Set the node priorities in the group to suit your structure.

- Add the VMs in the Resources section. Here you can set the requested state of each VM under HA and the group it belongs to.
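The same steps can be done on the CLI with `ha-manager`. The sketch below only prints the commands (a dry run). The group name `hpc-ha`, the node priorities, and the VM IDs are hypothetical. In an HA group, a higher priority number marks a more preferred node.

```shell
#!/bin/sh
# Dry run: print the ha-manager commands for an HA group and its VMs.
# ASSUMPTIONS: group name, node priorities and VM IDs are hypothetical.
GROUP=hpc-ha
NODES="hpc-node1:2,hpc-node2:1,hpc-node3:1"   # node:priority, higher = preferred
VMIDS="100 101 102"

ha_commands() {
    echo "ha-manager groupadd $GROUP --nodes $NODES"
    for id in $VMIDS; do
        # Request that each VM is kept running inside the group.
        echo "ha-manager add vm:$id --group $GROUP --state started"
    done
}

ha_commands
```

After running the commands, `ha-manager status` shows the same information as the HA Status view in the GUI.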

CREATE HA GROUP

Add VM resources for HA

HA Status

Test migration of a VM
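A live migration can also be triggered from the CLI with `qm migrate`. The sketch below only prints the command. VM ID 100 and the target node are hypothetical. Because the VM disks live on the shared Ceph pool, only the VM's memory and state have to be transferred.

```shell
#!/bin/sh
# Dry run: print a live-migration command for a test VM.
# ASSUMPTION: VM ID and target node are hypothetical.
migrate_cmd() {
    vmid=$1
    target=$2
    # --online migrates the VM while it keeps running.
    echo "qm migrate $vmid $target --online"
}

migrate_cmd 100 hpc-node2
```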

Success!!! Finally, let’s briefly summarize:

- We set up three Proxmox installations for the CEPH Cluster.

- We created the Cluster over the management network.

- We installed CEPH on each Proxmox server, with Ceph traffic running over the NVIDIA Mellanox InfiniBand switch.

- We configured the Monitor, Manager, OSD, and Pool settings in CEPH on each Proxmox node.

- After deploying the virtual servers, we added them to HA.

Resources:

https://docs.ceph.com/en/reef/rados/operations/placement-groups/

https://pve.proxmox.com/wiki/Deploy_Hyper-Converged_Ceph_Cluster
